云计算平台是非常巨大的分布式系统,需要处理庞大的处理请求,因此任何小概率事件在此平台中都必然发生。

DBMS强调ACID:原子性(Atomicity)、一致性(Consistency)、隔离性(Isolation)、持久性 (Durability)。其中的一致性强调当程序员定义的事务完成时,数据库处于一致的状态,如对于转帐来说,事务完成时必须是A少了多少钱B就多了多少钱。而对于很多互联网应用来说,对于一致性和隔离性的要求可以降低,而可用性(Availability)的要求则更为明显。从而产生了两种弱一致性的理论:BASE和CAP。

继续研究几篇论文

The Chubby lock service for loosely-coupled distributed systems

Paxos算法

Google咖啡因系统

Pregel计算模型

亚马逊dynamo系统

Facebook Cassandra

数据收集和存储的速度正在惊人地发展,对Google而言,数以万计的服务器中存储的PB级数据,以及每天在服务器中处理的数以亿计的图片文件,都对其未来的系统架构提出了新的挑战。重新设计系统架构以此优化搜索引擎的增量处理能力已是Google当务之急。

Updated As its custom-built file system strains under the weight of an online empire it was never designed to support, Google is brewing a replacement.

Apparently, this overhaul of the Google File System is already under test as part of the "Caffeine" infrastructure the company announced earlier this week.

zz from:

http://blog.csdn.net/AE86_FC/archive/2010/08/08/5796640.aspx

Abstract

许多实际应用问题中都涉及到大型的图算法。比如网页链接关系和社会关系图等。这些图都有相同的特点:规模超大,常常达到数十亿的顶点和上万亿的边。这么大的规模,给需要在其上进行高效计算的应用提出了巨大的难题。在这篇论文中,我们将提出一种适合处理这类问题的计算模式。将程序用一系列的迭代来描述(Programs are expressed as a sequence of iterations),在每一次迭代中,每一个顶点都能接收来自上一次迭代的信息,并将这些信息传送给下一个顶点,并在此过程中修改其自身的状态信息,以该顶点为起点的出边的状态信息,或改变整个图的拓扑结构。这种面向顶点的方法足够的灵活,可以用来描述一系列的算法。这种计算模式被设计的足够高效,可扩展,和足够的容错,并在有上千台的计算节点的集群中得以实现。这种模式中隐式的同步性(implied synchronicity)使得它对程序的确认变得简单。分布式相关的细节已经被一组抽象的API给隐藏。而展现给人们的仅仅是一个表现力很强,很容易编程的大型图算法处理的计算框架。

Keywords

分布式计算,图算法

1.Introducetion

Internet使得Web graph成为一个人们争相分析和研究的热门对象。Web 2.0更是将对社会关系网的关注推向高潮。同时还有其他的大型图对象(如交通路线图,报纸文献,疾病爆发路径,以及科学研究的发表文章中的引用关系等),也已经被研究了很多年了。同时也有了许多相应的应用算法,如最短路径算法,page rank理论演变而来的图相关算法等等。同时还有许多其他的图计算问题也有着相当的实际价值,如最小切割,以及连通分支等相关问题。

事实证明,高效的处理大型图对象的计算是一件极具挑战性的事情。图算法常常暴露出类似不高效的本地内存访问,针对每个顶点的处理过少,以及在计算过程中改变并行度等问题。分布式的介入更是加剧了locality的问题,并且加剧了在计算过程中机器发生故障而影响计算的可能性。尽管大型图形无处不在,其商业应用也非常普及,但是一种通用的,适合各种图算法的大型分布式环境的实现到目前还不存在。

要实现一种大型图计算的算法通常意味着要在以下几点中作出选择:

Spanner: Google s next Massive Storage and Computation infrastructure

MapReduce Bigtable and Pregel have their origins in Google and they all deal with large systems . But all of them may be dwarfed in size and complication by a new project Google is working on which was mentioned briefly (may be un-intentionally) at an event last year.

zz from:

http://horicky.blogspot.com/2010/10/scalable-system-design-patterns.html

Looking back after 2.5 years since my previous post on scalable system design techniques, I’ve observed an emergence of a set of commonly used design patterns. Here is my attempt to capture and share them.

Load Balancer

In this model, there is a dispatcher that determines which worker instance will handle the request based on different policies. The application should best be "stateless" so any worker instance can handle the request.

This pattern is deployed in almost every medium to large web site setup.

Scatter and Gather

In this model, the dispatcher multicast the request to all workers of the pool. Each worker will compute a local result and send it back to the dispatcher, who will consolidate them into a single response and then send back to the client.

This pattern is used in Search engines like Yahoo, Google to handle user’s keyword search request … etc.

Result Cache

In this model, the dispatcher will first lookup if the request has been made before and try to find the previous result to return, in order to save the actual execution.

This pattern is commonly used in large enterprise application. Memcached is a very commonly deployed cache server.

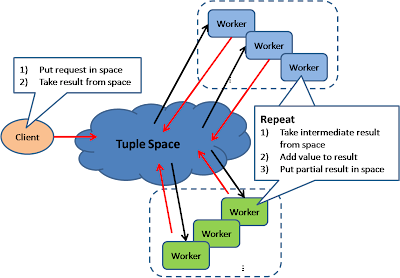

Shared Space

This model also known as "Blackboard"; all workers monitors information from the shared space and contributes partial knowledge back to the blackboard. The information is continuously enriched until a solution is reached.

This pattern is used in JavaSpace and also commercial product GigaSpace.

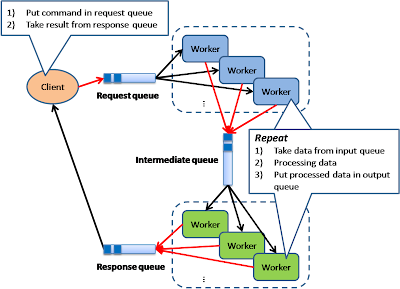

Pipe and Filter

This model is also known as "Data Flow Programming"; all workers connected by pipes where data is flow across.

This pattern is a very common EAI pattern.

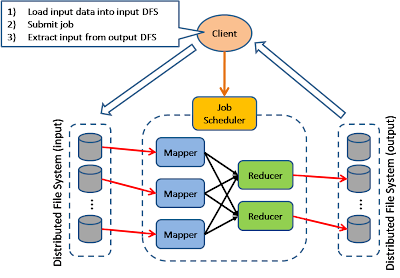

Map Reduce

The model is targeting batch jobs where disk I/O is the major bottleneck. It use a distributed file system so that disk I/O can be done in parallel.

This pattern is used in many of Google’s internal application, as well as implemented in open source Hadoop parallel processing framework. I also find this pattern can be used in many many application design scenarios.

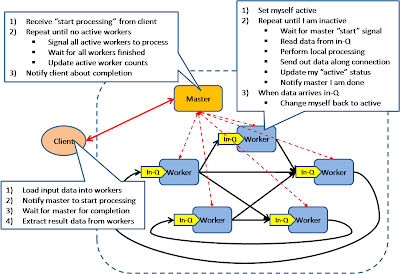

Bulk Synchronous Parellel

This model is based on lock-step execution across all workers, coordinated by a master. Each worker repeat the following steps until the exit condition is reached, when there is no more active workers.

Each worker read data from input queue

Each worker perform local processing based on the read data

Each worker push local result along its direct connection

This pattern has been used in Google’s Pregel graph processing model as well as the Apache Hama project.

Execution Orchestrator

This model is based on an intelligent scheduler / orchestrator to schedule ready-to-run tasks (based on a dependency graph) across a clusters of dumb workers.

This pattern is used in Microsoft’s Dryad project

Although I tried to cover the whole set of commonly used design pattern for building large scale system, I am sure I have missed some other important ones. Please drop me a comment and feedback.

Also, there is a whole set of scalability patterns around data tier that I haven’t covered here. This include some very basic patterns underlying NOSQL. And it worths to take a deep look at some leading implementations.

from:

After 25 years of dominance, relational databases and SQL have in recent years come under fire from the growing “NoSQL movement.” A key element of this movement is Hadoop, the open-source clone of Google’s internal MapReduce system. Whether it’s interpreted as “No SQL” or “Not Only SQL,” the message has been clear: If you have big data challenges, then your programming tool of choice should be Hadoop.

from: http://hi.baidu.com/makeittrue/blog/item/bb6ca4371b4941360b55a954.html

参考:http://wenku.baidu.com/view/0ea86ffdc8d376eeaeaa3198.html

select()系统调用提供一个机制来实现同步多元I/O:

#include <sys/time.h>

#include <sys/types.h>

#include <unistd.h>

int select (int n,

fd_set *readfds,

fd_set *writefds,

fd_set *exceptfds,

struct timeval *timeout);

FD_CLR(int fd, fd_set *set);

FD_ISSET(int fd, fd_set *set);

FD_SET(int fd, fd_set *set);

FD_ZERO(fd_set *set);